How to pass the Salesforce Certified Data Architecture and Management Designer Exam

This was my first architect exam.

It is not as easy as the Sharing and Visibility exam.

But if you prepare well, practise, and study all the topics mentioned in the resource guides, you can easily crack it. You need a good understanding of the data and security/sharing model in an LDV (large data volume) environment, as well as best practices around LDV data migration.


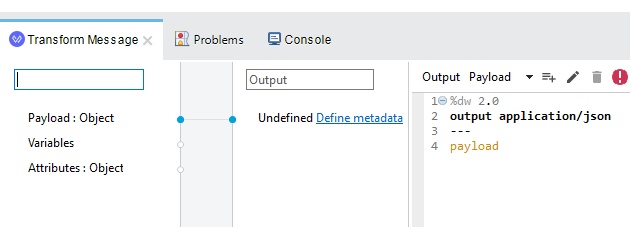

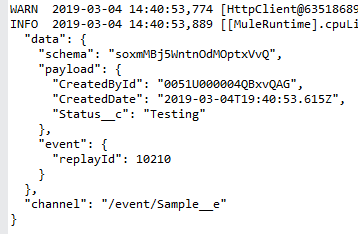

Behind the scenes, the Bulk API uploads the data into temporary tables and then processes it (the actual load into the target objects) using parallel asynchronous processes.

The following are the most important topics:

- Large Data Volumes (LDV)

- Skinny Tables

- Indexes

- Data Loading (LDV)

- Data Quality

Large Data Volumes (LDV)

There are a number of exam questions on this topic. Roughly speaking, once you hit the 2 million record threshold, we can start talking about LDV. Several areas may be affected by that many records:

- Reports

- Search

- List views

- SOQL

To have a better overview of the topic I strongly recommend going through Salesforce’s Best Practices for Deployments with Large Data Volumes ebook.

There is a nice chapter devoted to the database architecture. It is quite eye-opening about how data is stored, searched and deleted in Salesforce.

There are some key challenges connected with LDV:

- Data Skew – no parent record should have more than 10,000 child records; data should be evenly distributed

- Sharing Calculation Time – you can defer sharing calculation when loading large chunks of data into the system

- Upsert Performance – it is better to insert new records and update existing ones in separate operations; upsert is quite an expensive operation

- Report Timeouts

  - Apply selective report filtering

- Non-Selective Queries (Query Optimization)

  - Make queries more selective: reduce the number of objects and fields used in a query

  - Custom Indexes

  - Avoid filtering on NULL values (by default these are not indexed)

  - PK Chunking Mechanisms

- Data Reduction Considerations:

  - Archiving – consider off-platform archiving solutions

  - Data Warehouse – consider a data warehouse for analytics

  - Mashups – real-time data loading and integration at the UI level (for example, using a Visualforce page)
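The data skew rule of thumb is easy to check before a load. Below is a minimal Python sketch, assuming you have exported the parent lookup column (for example AccountId from a Contact extract) into a plain list; the function name and the sample Ids are hypothetical.

```python
from collections import Counter

# Rule of thumb from the LDV guidance: no parent should own more than
# 10,000 child records.
SKEW_THRESHOLD = 10_000


def find_skewed_parents(parent_ids, threshold=SKEW_THRESHOLD):
    """Return {parent_id: child_count} for parents above the threshold.

    parent_ids holds one entry per child record, e.g. the AccountId
    column of an exported Contact file (hypothetical input format).
    """
    counts = Counter(parent_ids)
    return {parent: n for parent, n in counts.items() if n > threshold}


if __name__ == "__main__":
    # Hypothetical extract: one Account with 12,000 Contacts, one with 50.
    extract = ["001A"] * 12_000 + ["001B"] * 50
    print(find_skewed_parents(extract))  # {'001A': 12000}
```

Any parent this reports is a candidate for redistributing its children across several parent records before the load.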

Skinny Tables

Skinny tables are quite an interesting concept that I was not aware of before. Salesforce creates skinny tables to contain frequently used fields and to avoid joins, and it keeps the skinny tables in sync with their source tables when the source tables are modified. To enable skinny tables, contact Salesforce Customer Support. For each object table, Salesforce maintains other, separate tables at the database level for standard and custom fields. This separation ordinarily necessitates a join when a query contains both kinds of fields. A skinny table contains both kinds of fields and does not include soft-deleted records. This table shows an Account view, a corresponding database table, and a skinny table that would speed up Account queries.
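To make the join versus skinny table idea concrete, here is a toy Python model. It is only an illustration, assuming a simplified in-memory layout where standard and custom fields live in two dicts keyed by record Id; it is not how the Salesforce database actually stores data, and on the real platform Salesforce (not your code) keeps the skinny table in sync.

```python
# Toy model only (hypothetical layout): standard and custom fields of Account
# live in two separate "tables" keyed by record Id.
STANDARD = {
    "001A": {"Name": "Acme", "IsDeleted": False},
    "001B": {"Name": "Globex", "IsDeleted": True},  # soft-deleted (Recycle Bin)
}
CUSTOM = {
    "001A": {"Region__c": "EMEA"},
    "001B": {"Region__c": "APAC"},
}


def query_with_join(fields):
    """Every query mixing standard and custom fields joins on Id and
    filters out soft-deleted rows."""
    rows = []
    for rec_id, std in STANDARD.items():
        if std["IsDeleted"]:
            continue
        joined = {**std, **CUSTOM.get(rec_id, {})}
        rows.append({f: joined[f] for f in fields})
    return rows


def build_skinny_table(fields):
    """Pay for the join once: store the chosen fields side by side and leave
    soft-deleted rows out. This toy version must be rebuilt after changes."""
    skinny = {}
    for rec_id, std in STANDARD.items():
        if std["IsDeleted"]:
            continue  # skinny tables do not include soft-deleted records
        joined = {**std, **CUSTOM.get(rec_id, {})}
        skinny[rec_id] = {f: joined[f] for f in fields}
    return skinny


def query_skinny(skinny):
    """Reading the skinny table is a single-table scan: no join, no
    IsDeleted filter."""
    return list(skinny.values())


if __name__ == "__main__":
    fields = ["Name", "Region__c"]
    skinny = build_skinny_table(fields)
    print(query_skinny(skinny))  # [{'Name': 'Acme', 'Region__c': 'EMEA'}]
    assert query_skinny(skinny) == query_with_join(fields)
```

Both paths return the same rows; the difference is that the skinny table has already done the join and the soft-delete filtering.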

Indexes

Salesforce supports custom indexes to speed up queries, and you can create custom indexes by contacting Salesforce Customer Support. The platform automatically maintains indexes on the following fields for most objects:

- RecordTypeId

- Division

- CreatedDate

- Systemmodstamp (LastModifiedDate)

- Name

- Email (for contacts and leads)

- Foreign key relationships (lookups and master-detail)

- The unique Salesforce record ID, which is the primary key for each object

Custom indexes are supported on custom fields, except for these field types:

- multi-select picklists

- text area (long)

- text area (rich)

- non-deterministic formula fields (like ones using TODAY or NOW)

- encrypted text fields

External ID fields are also indexed. Field types that can be marked as External IDs include:

- Auto Number

- Number

- Text
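Whether a filter on an indexed field actually helps depends on selectivity. Salesforce’s LDV best practices describe the optimizer thresholds: a filter on a standard index is selective if it matches fewer than 30% of the first million records plus 15% of the records beyond that, up to a maximum of 1,000,000; for a custom index the figures are 10% and 5%, up to 333,333. The Python sketch below just encodes those numbers so you can sanity-check a filter; the function names are hypothetical, and the real optimizer decision also relies on statistics Salesforce collects.

```python
# Selectivity thresholds as described in Salesforce's LDV best practices:
# (percent of first million records, percent of records beyond that, cap)
THRESHOLDS = {
    "standard": (30, 15, 1_000_000),
    "custom": (10, 5, 333_333),
}


def selectivity_threshold(total_records, index="standard"):
    """A filter must match fewer than this many records to be selective.
    Integer arithmetic avoids floating-point rounding."""
    first_pct, rest_pct, cap = THRESHOLDS[index]
    first_million = min(total_records, 1_000_000)
    beyond = max(total_records - 1_000_000, 0)
    return min((first_million * first_pct + beyond * rest_pct) // 100, cap)


def is_selective(matching_records, total_records, index="standard"):
    return matching_records < selectivity_threshold(total_records, index)


if __name__ == "__main__":
    # 2 million Accounts, filtering on a custom-indexed field.
    print(selectivity_threshold(2_000_000, "custom"))  # 150000
    print(is_selective(120_000, 2_000_000, "custom"))  # True
```

For example, with 2 million records a custom-indexed filter has to narrow the result below 150,000 rows; otherwise the optimizer is likely to fall back to a full scan.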

Data Loading

You have to know the ways to integrate Salesforce with data from external systems:

- ETL Tools

- SFDC Data Import Wizard

- Data Loader

- Outbound Messages

- SOAP and REST API

Bulk API is based on REST principles and is optimized for loading or deleting large sets of data. You can use it to query, insert, update, upsert, or delete many records asynchronously by submitting batches. Salesforce processes batches in the background. An interesting fact is that Data Loader can also use the Bulk API; you just have to switch it on explicitly in the settings (the “Use Bulk API” option).

Source: https://developer.salesforce.com/page/Loading_Large_Data_Sets_with_the_Force.com_Bulk_API
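PK chunking, mentioned in the LDV section, is a Bulk API option for extracting very large tables: the query is split into chunks by record Id (primary key) range, and each chunk runs as its own batch. Salesforce works out the boundaries itself from the Id index; the Python sketch below only illustrates the idea on an already sorted list of Ids, assuming a chunk size of 100,000. The helper names are hypothetical.

```python
def pk_chunk_boundaries(sorted_ids, chunk_size=100_000):
    """Split an ascending list of record Ids into (first_id, last_id) ranges
    of at most chunk_size Ids each. Illustration only: this client-side
    version needs the full Id list, which Salesforce does not."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (sorted_ids[i], sorted_ids[min(i + chunk_size, len(sorted_ids)) - 1])
        for i in range(0, len(sorted_ids), chunk_size)
    ]


def chunk_queries(sobject, ranges):
    """Build one SOQL query per Id range; each would run as a separate batch."""
    return [
        f"SELECT Id FROM {sobject} WHERE Id >= '{lo}' AND Id <= '{hi}'"
        for lo, hi in ranges
    ]


if __name__ == "__main__":
    ids = ["001a", "001b", "001c"]  # hypothetical, already sorted
    for query in chunk_queries("Account", pk_chunk_boundaries(ids, chunk_size=2)):
        print(query)
```

Because every chunk filters on the indexed Id field, each chunk query stays selective even when the whole table is huge.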

As mentioned in the LDV section, you have to keep a few things in mind when uploading data:

- Disable triggers and workflows

- Defer calculation of sharing rules

- Insert + update is faster than upsert

- Group and sequence data to avoid parent record locking

- Tune the batch size, and use HTTP keep-alive and GZIP compression
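Parent record locking happens when parallel batches insert or update child records that point to the same parent (for example, Contacts under one Account), because each batch needs a lock on that parent. One way to group and sequence the data is to sort children by parent Id and cut batches on parent boundaries, as in this Python sketch. The function name is hypothetical, and the default batch size of 10,000 assumes the Bulk API per-batch record limit.

```python
from itertools import groupby
from operator import itemgetter


def batches_grouped_by_parent(records, parent_field, batch_size=10_000):
    """Sort child records by parent Id and cut batches on parent boundaries,
    so children of one parent are not spread across parallel batches
    (unless a single parent has more children than batch_size)."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    ordered = sorted(records, key=itemgetter(parent_field))
    batches, current = [], []
    for _, group in groupby(ordered, key=itemgetter(parent_field)):
        children = list(group)
        # Close the current batch if this parent's children would overflow it.
        if current and len(current) + len(children) > batch_size:
            batches.append(current)
            current = []
        current.extend(children)
        # A parent larger than batch_size has to be split across batches anyway.
        while len(current) > batch_size:
            batches.append(current[:batch_size])
            current = current[batch_size:]
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    contacts = [{"LastName": f"C{i}", "AccountId": acc} for i, acc in enumerate("ABABC")]
    for batch in batches_grouped_by_parent(contacts, "AccountId", batch_size=3):
        print([c["AccountId"] for c in batch])
    # ['A', 'A']
    # ['B', 'B', 'C']
```

Sorting alone already helps; cutting on parent boundaries additionally keeps two parallel batches from contending for the same Account.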

Data Quality

There is a nice overview from Salesforce, “6 steps toward top data quality”. Some of the key points:

- Use exception reports and data-quality dashboards to remind users when their Accounts and Contacts are incorrect or incomplete. Scheduling a dashboard refresh and sending that information to managers is a great way to encourage compliance

- When designing your integration, evaluate your business applications to determine which one will serve as your system of record (or “master”) for the synchronization process. The system of record can be a different system for different business processes

- Use Workflow, Validation Rules, and Force.com code (Apex) to enforce critical business processes

- Use the built-in Salesforce Duplicate Rules and Matching Rules
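Duplicate Rules and Matching Rules are configured declaratively in Setup, not in code, but the underlying idea (normalize a few fields into a match key, then group records that share it) can be sketched in Python. The matching logic below is a hypothetical exact-match simplification; real Matching Rules also support fuzzy matching, and the function names are not a Salesforce API.

```python
from collections import defaultdict


def match_key(contact):
    """Hypothetical matching rule: exact match on normalized Email, falling
    back to normalized FirstName + LastName."""
    email = (contact.get("Email") or "").strip().lower()
    if email:
        return ("email", email)
    first = (contact.get("FirstName") or "").strip().lower()
    last = (contact.get("LastName") or "").strip().lower()
    if not last:
        return None  # not enough data to match on
    return ("name", first, last)


def find_duplicates(contacts):
    """Group record Ids by match key and return groups with more than one Id."""
    groups = defaultdict(list)
    for contact in contacts:
        key = match_key(contact)
        if key is not None:
            groups[key].append(contact["Id"])
    return [ids for ids in groups.values() if len(ids) > 1]


if __name__ == "__main__":
    contacts = [
        {"Id": "1", "Email": "Ann@Example.com ", "FirstName": "Ann", "LastName": "Lee"},
        {"Id": "2", "Email": "ann@example.com", "FirstName": "Anna", "LastName": "Lee"},
        {"Id": "3", "Email": "", "FirstName": "Bob", "LastName": "Stone"},
        {"Id": "4", "Email": None, "FirstName": " bob", "LastName": "STONE"},
        {"Id": "5", "FirstName": "Cy", "LastName": "Park"},
    ]
    print(find_duplicates(contacts))  # [['1', '2'], ['3', '4']]
```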

Other important terms:

- Data Governance – refers to the overall management of the availability, usability, integrity, and security of the data employed in an enterprise. A sound data governance program includes a governing body or council, a defined set of procedures, and a plan to execute those procedures

- Data Stewardship – management and oversight of an organization’s data assets to help provide business users with high-quality data that is easily accessible in a consistent manner